语义分割算法之DeepLabV3+

论文标题: Encoder-Decoder with Atrous Separable Convolution for Semantic Image

Segmentation

DeepLab series has come along for versions from DeepLabv1 (2015 ICLR), DeepLabv2 (2018 TPAMI), and DeepLabv3 (arXiv).

论文地址: https://arxiv.org/pdf/1802.02611.pdf

github: https://github.com/jfzhang95/pytorch-deeplab-xception

语义分割主要面临两个问题,第一是物体的多尺度问题,第二是DCNN的多次下采样会造成特征图分辨率变小,导致预测精度降低,边界信息丢失。DeepLab V3设计的ASPP模块较好的解决了第一个问题,而这里要介绍的DeepLabv3+则主要是为了解决第2个问题的。 我们知道从DeepLabV1系列引入空洞卷积开始,我们就一直在解决第2个问题呀,为什么现在还有问题呢?见以前的博客:deeplabv1和deeplabv2和deeplabv3-空洞卷积(语义分割)。对于DeepLabV3,如果Backbone为ResNet101,Stride=16将造成后面9层的特征图变大,后面9层的计算量变为原来的4倍大。而如果采用Stride=8,则后面78层的计算量都会变得很大。这就造成了DeepLabV3如果应用在大分辨率图像时非常耗时。所以为了改善这个缺点,DeepLabV3+来了。

Overview

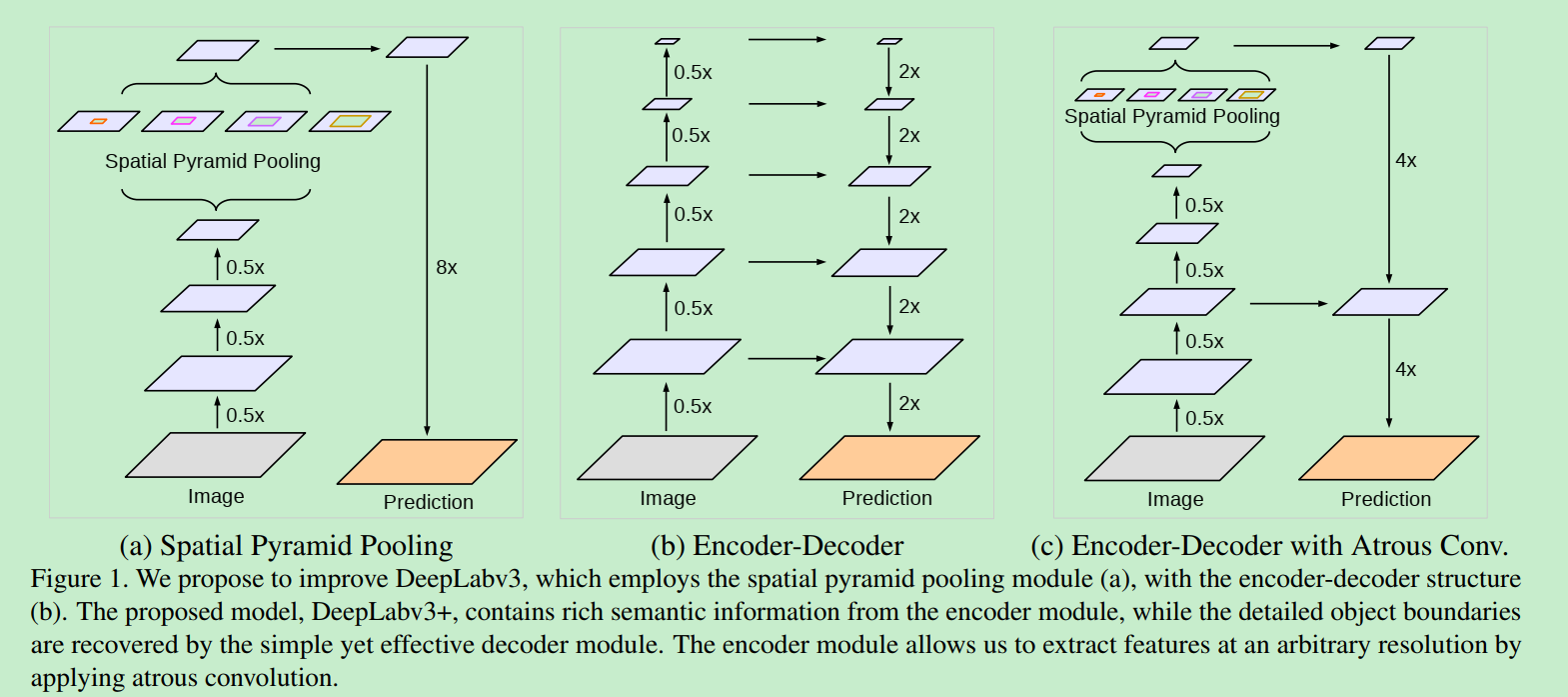

(a): With Atrous Spatial Pyramid Pooling (ASPP), able to encode multi-scale contextual information. ASPP 模块,可以编码多尺度特征, 其中的8x是直接双线性插值操作,不用参与训练 。

(b): With Encoder-Decoder Architecture, the location/spatial information is recovered. Encoder-Decoder Architecture has been proved to be useful in literature such as FPN, DSSD, TDM, SharpMask, RED-Net, and U-Net for different kinds of purposes. 编解码器结构, 可以恢复位置/空间信息。 事实证明,编码器/解码器体系结构在FPN,DSSD,TDM,SharpMask,RED-Net和U-Net等文献中可用于多种用途。 融合了低层和高层的信息。

(c): DeepLabv3+ makes use of (a) and (b). 本文使用的DeeplabV3+结构。采用了(a)和(b)。

Further, with the use of Modified Aligned Xception, and Atrous Separable Convolution, a faster and stronger network is developed. 此外,通过使用修正的对齐Xception和Atrous可分离卷积,可以开发出更快,更强大的网络。

最后,DeepLabv3 +的性能优于PSPNet(在2016年ILSVRC场景解析挑战赛中排名第一)和之前的DeepLabv3。

OutLine

- Atrous Separable Convolution

- Encoder-Decoder Architecture

- Modified Aligned Xception

- Ablation Study

- Comparison with State-of-the-art Approaches

Atrous Separable Convolution

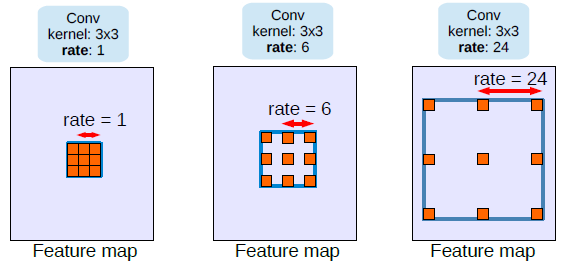

Atrous Convolution

- 对于输出y上的每个位置i和一个滤波器w, atrous卷积应用于输入特征映射x,其中atrous rate对应于我们采样输入信号时的步幅。

- 空洞卷积详见: https://hongliangzhu.cn/2020/04/09/deeplabv3-%E7%A9%BA%E6%B4%9E%E5%8D%B7%E7%A7%AF-%E8%AF%AD%E4%B9%89%E5%88%86%E5%89%B2/#%E7%A9%BA%E6%B4%9E%E5%8D%B7%E7%A7%AF

Atrous Separable Convolution

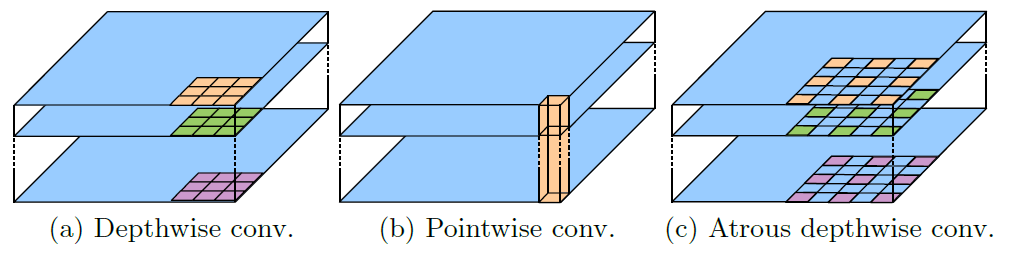

- (a)和(b),深度可分卷积:将标准卷积分解为深度卷积,然后再进行点向卷积(即1×1卷积),大大降低了计算复杂度。

- (c): 它在保持相似(或更好)性能的同时,大大降低了所提出模型的计算复杂度。

- Combining with point-wise convolution, it is Atrous Separable Convolution.

Encoder-Decoder Architecture

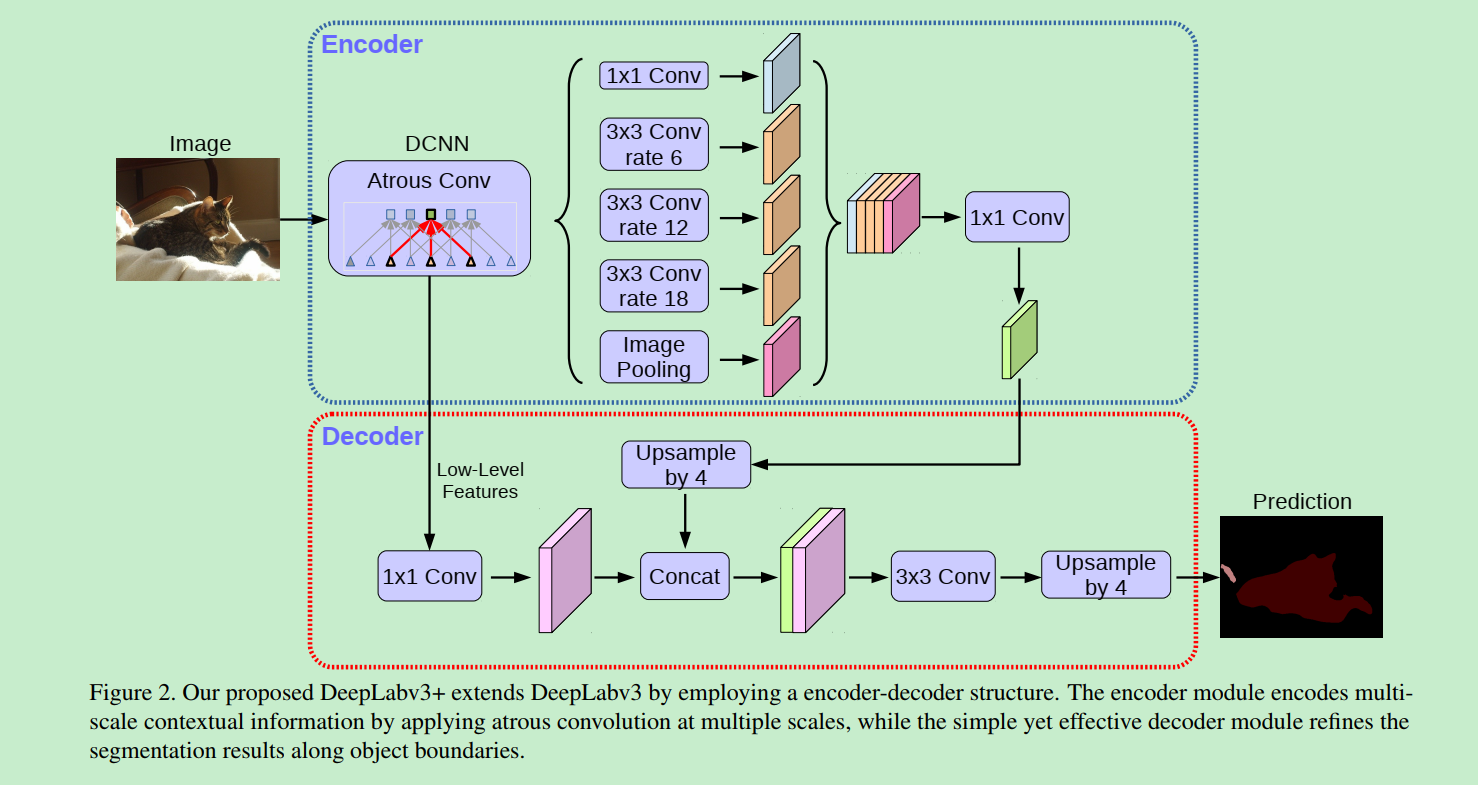

为了解决上面提到的DeepLabV3在分辨率图像的耗时过多的问题,DeepLabV3+在DeepLabV3的基础上加入了编码器。这是Deeplabv3+的一个主要的创新点。

DeepLabv3 as Encoder

编码器就是一个DeeplabV3结构。 首先选一个低层级的feature用1 * 1的卷积进行通道压缩(原本为256通道,或者512通道),目的是减少低层级的比重。论文认为编码器得到的feature具有更丰富的信息,所以编码器的feature应该有更高的比重。 这样做有利于训练。

- 对于图像分类的任务来说, 最终特征图的空间分辨率通常比输入图像分辨率小32倍,因此输出步幅= 32。 (output stride = 32)

- 对于语义分割来说,缩小32倍太小了。

- 通过移除最后一个(或者两个)块中的步幅并相应的应用空洞卷积,采用out stride=16(或者8)进行更加密集的特征提取。

- 同时,Deeplabv3增强了ASPP模块, 该模块通过以不同rate应用具有图像级别特征的atrous卷积来探测多尺度的卷积特征。

Proposed Decoder

对于解码器部分,直接将编码器的输出上采样4倍,使其分辨率和低层级的feature一致。举个例子,如果采用resnet conv2 输出的feature,则这里要x4上采样。将两种feature连接后,再进行一次3x3的卷积(细化作用),然后再次上采样就得到了像素级的预测。

实验结果表明,这种结构在Stride=16时有很高的精度速度又很快。stride=8相对来说只获得了一点点精度的提升,但增加了很多的计算量。

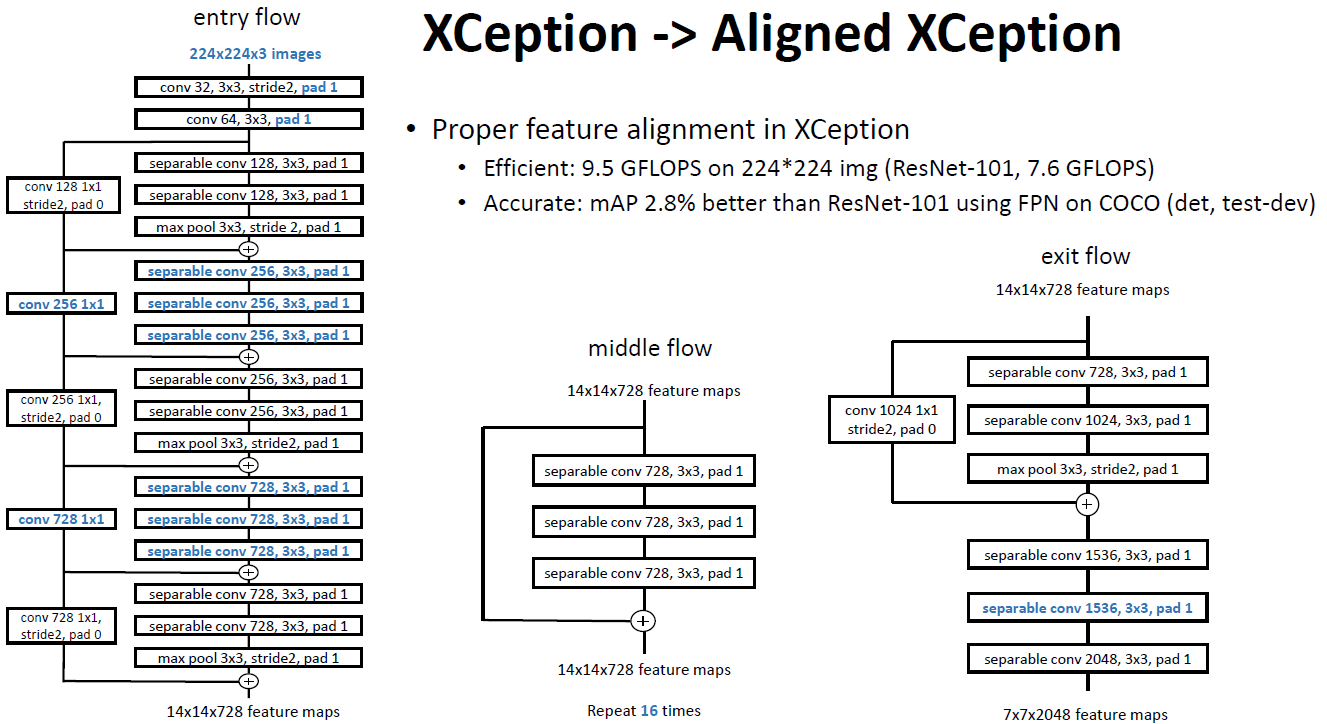

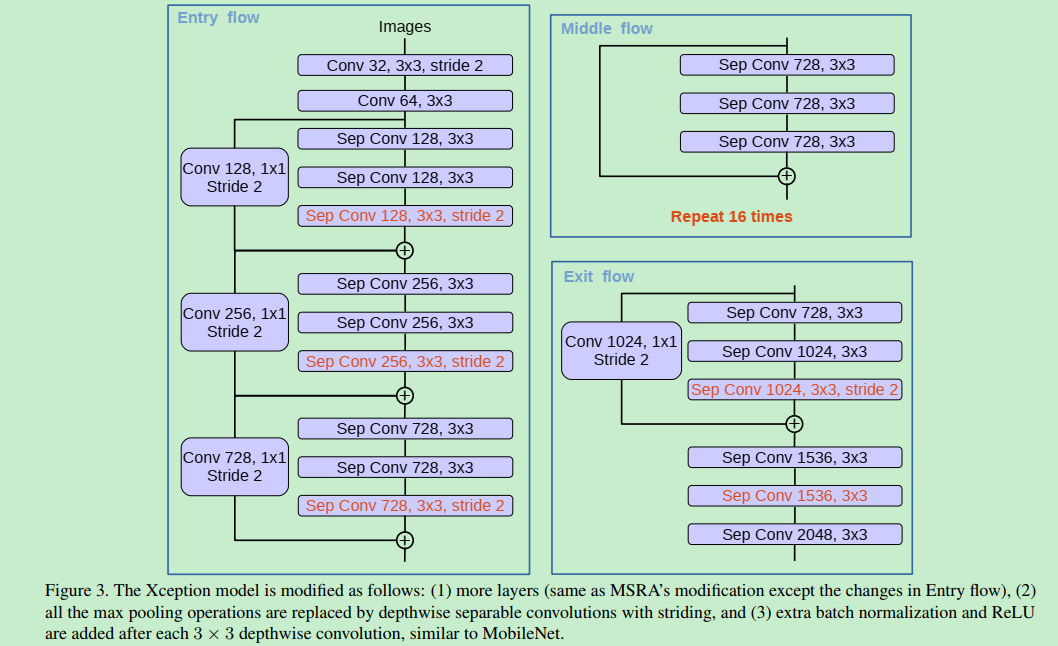

Modified Aligned Xception

Aligned Xception

- Xception 是用于图像分类任务的

- Aligned Xception 是来自可变形卷积,用于目标检测。

Modified Aligned Xception

论文受到近期MSRA组在Xception上改进工作可变形卷积(Deformable-ConvNets)启发,Deformable-ConvNets对Xception做了改进,能够进一步提升模型学习能力,新的结构如下:

- 更深的Xception结构,不同的地方在于不修改entry flow network的结构,为了快速计算和有效的使用内存

- 所有的max pooling结构被stride=2的深度可分离卷积代替

- 每个3x3的depthwise convolution都跟BN和Relu

最后将改进后的Xception作为encodet主干网络,替换原本DeepLabv3的ResNet101。

Ablation Study

Decoder Design

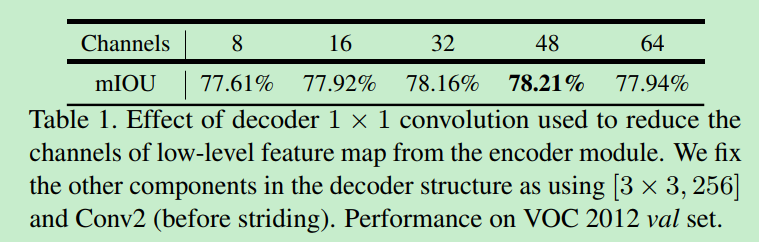

论文使用modified aligned Xception改进后的ResNet-101,在ImageNet-1K上做预训练,通过扩张卷积做密集的特征提取。采用DeepLabv3的训练方式(poly学习策略,crop 513x513)。注意在decoder模块同样包含BN层。

为了评估在低级特征使用1*1卷积降维到固定维度的性能,做了如下对比实验:

实验中取了conv2尺度为[3x3, 256]的输出,降维后的通道数在32和48之间最佳,最终选择了48。

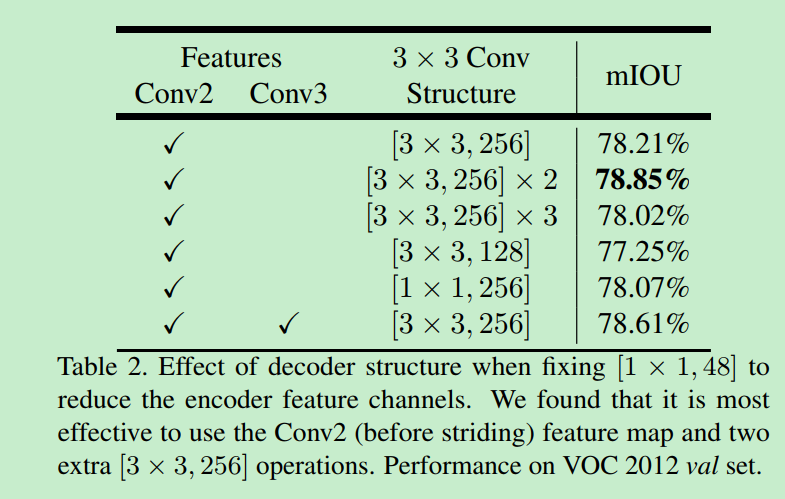

编解码特征图融合后经过了3x3卷积,论文探索了这个卷积的不同结构对结果的影响

And it is most effective to use the Conv2 (before striding) feature map and two extra [3×3 conv; 256 channels] operations.

最终,选择了使用两组3x3卷积。这个表格的最后一项代表实验了如果使用Conv2和Conv3同时预测,上采样2倍后与Conv3结合,再上采样2倍的结果对比,这并没有提升显著的提升性能,考虑到计算资源的限制,论文最终采样简单的decoder方案,即我们看到的DeepLabV3+的网络结构图。

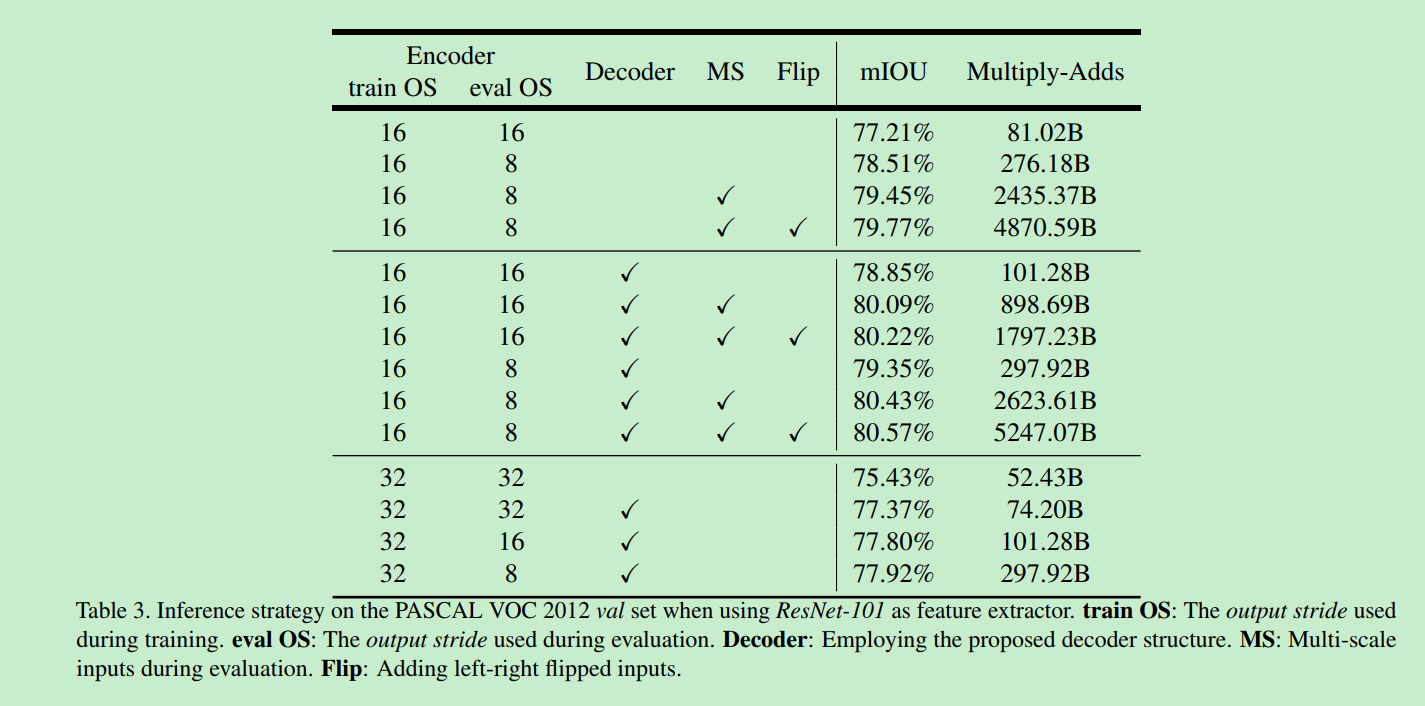

Model Variants with ResNet as Backbone

- Baseline (First row block): 77.21% to 79.77% mIOU

- With Decoder (Second row block): The performance is improved from 77.21% to 78.85% or 78.51% to 79.35%.

- The performance is further improved to 80.57% when using multi-scale and left-right flipped inputs.

- Coarser feature maps (Third row block): i.e. stride = 32, the performance is not good.

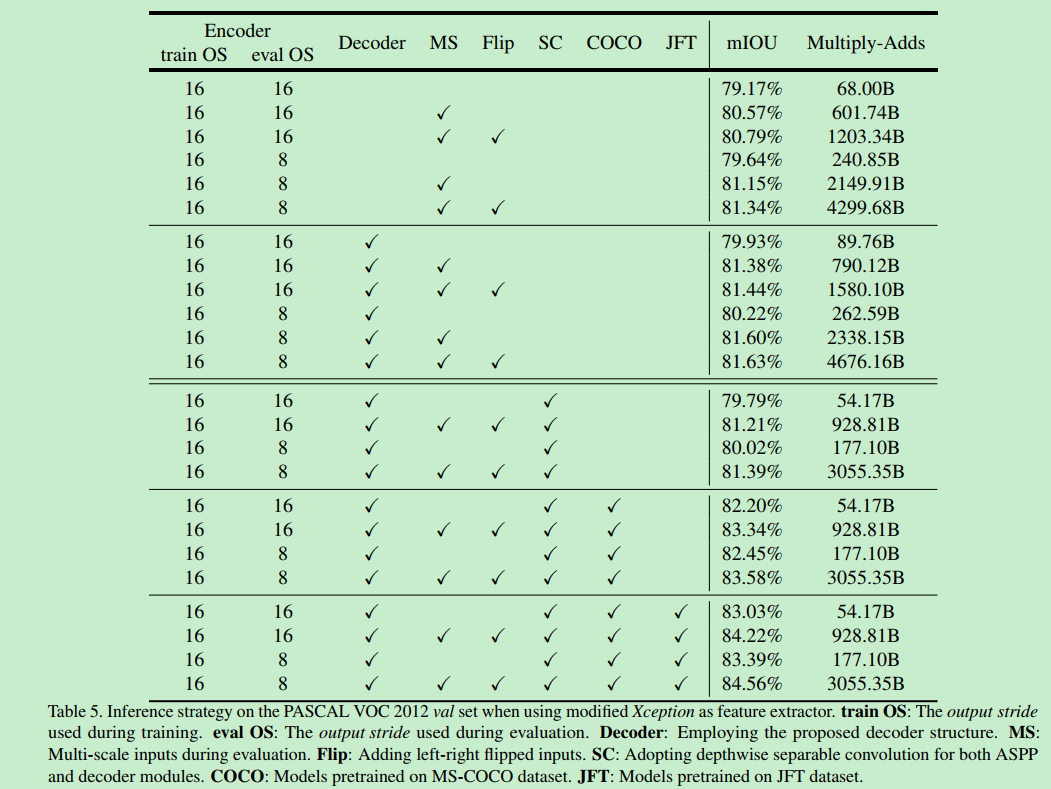

Modified Aligned Xception as Backbone

- Baseline (First row block): 79.17% to 81.34% mIOU.

- With Decoder (Second row block): 79.93% to 81.63% mIOU.

- Using Depthwise Separable Convolution (Third row block): Multiply-Adds is significantly reduced by 33% to 41%, while similar mIOU performance is obtained.

- Pretraining on COCO (Fourth row block): Extra 2% improvement.

- Pretraining on JFT (Fifth row block): Extra 0.8% to 1% improvement.

这里可以看到使用深度分离卷积可以显著降低计算消耗。

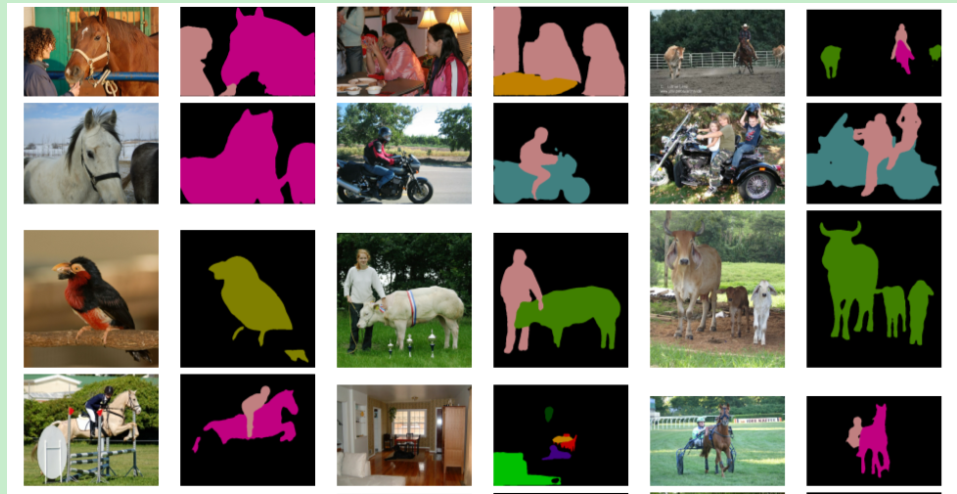

Visualization

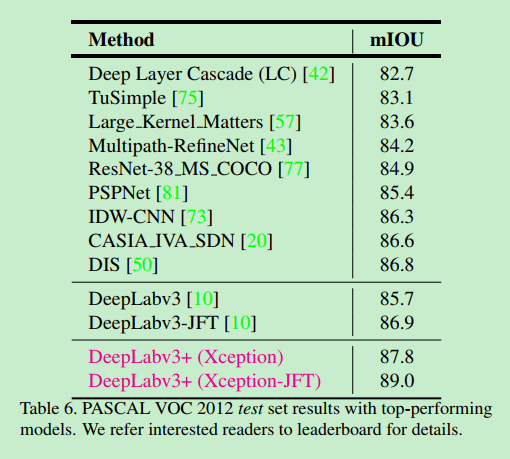

Comparison with State-of-the-art Approaches

DeepLabv3+ outperforms many SOTA approaches .

论文提出的DeepLabv3+是encoder-decoder架构,其中encoder架构采用Deeplabv3,decoder采用一个简单却有效的模块用于恢复目标边界细节。并可使用空洞卷积在指定计算资源下控制feature的分辨率。论文探索了Xception和深度分离卷积在模型上的使用,进一步提高模型的速度和性能。模型在VOC2012上获得了SOAT。Google出品,必出精品,这网络真的牛。

源码分析

github:https://github.com/jfzhang95/pytorch-deeplab-xception.git

1 | """ |

ASPP:

1 | import math |

Decoder:编解码器

1 | import math |